Rare mutation detection model

Last updated: 2023-03-07

Checks: 7 0

Knit directory: G000204_duplex/

This reproducible R Markdown analysis was created with workflowr (version 1.7.0). The Checks tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

Great! Since the R Markdown file has been committed to the Git repository, you know the exact version of the code that produced these results.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproduciblity it’s best to always run the code in an empty environment.

The command set.seed(20210916) was run prior to running

the code in the R Markdown file. Setting a seed ensures that any results

that rely on randomness, e.g. subsampling or permutations, are

reproducible.

Great job! Recording the operating system, R version, and package versions is critical for reproducibility.

Nice! There were no cached chunks for this analysis, so you can be confident that you successfully produced the results during this run.

Great job! Using relative paths to the files within your workflowr project makes it easier to run your code on other machines.

Great! You are using Git for version control. Tracking code development and connecting the code version to the results is critical for reproducibility.

The results in this page were generated with repository version a38edaa. See the Past versions tab to see a history of the changes made to the R Markdown and HTML files.

Note that you need to be careful to ensure that all relevant files for

the analysis have been committed to Git prior to generating the results

(you can use wflow_publish or

wflow_git_commit). workflowr only checks the R Markdown

file, but you know if there are other scripts or data files that it

depends on. Below is the status of the Git repository when the results

were generated:

Ignored files:

Ignored: .DS_Store

Ignored: .Rapp.history

Ignored: .Rhistory

Ignored: .Rproj.user/

Ignored: analysis/.DS_Store

Ignored: analysis/cache/

Ignored: data/.DS_Store

Ignored: scripts/

Untracked files:

Untracked: ._.DS_Store

Untracked: ._rare-mutation-detection.Rproj

Untracked: DOCNAME

Untracked: analysis/._.DS_Store

Untracked: analysis/._ecoli_spikeins.Rmd

Untracked: analysis/calc_nanoseq_metrics.Rmd

Untracked: data/._.DS_Store

Untracked: data/._metrics.rds

Untracked: data/ecoli/

Untracked: data/ecoli_k12_metrics.rds

Untracked: data/metadata/

Untracked: data/metrics_efficiency_nossc.rds

Untracked: data/metrics_spikeins.rds

Untracked: data/mixtures

Untracked: data/ref/

Untracked: drop_out_rate.pdf

Untracked: efficiency.pdf

Untracked: prototype_code/

Untracked: stats.csv

Note that any generated files, e.g. HTML, png, CSS, etc., are not included in this status report because it is ok for generated content to have uncommitted changes.

These are the previous versions of the repository in which changes were

made to the R Markdown (analysis/model.Rmd) and HTML

(docs/model.html) files. If you’ve configured a remote Git

repository (see ?wflow_git_remote), click on the hyperlinks

in the table below to view the files as they were in that past version.

| File | Version | Author | Date | Message |

|---|---|---|---|---|

| Rmd | a38edaa | mcmero | 2023-03-07 | Added coverage estimations using estimated efficiency |

| html | 8f81f70 | Marek Cmero | 2022-11-11 | Build site. |

| Rmd | eba33a5 | Marek Cmero | 2022-11-11 | Added sequencing depth and capture size example experiment table |

| html | f90d40a | Marek Cmero | 2022-08-18 | Build site. |

| Rmd | 7ff227e | Marek Cmero | 2022-08-18 | Added downsampling experiments and some presentation-only plots. |

| html | ede1103 | Marek Cmero | 2022-08-11 | Build site. |

| Rmd | e169b95 | Marek Cmero | 2022-08-11 | Added downsampling effect on duplicate rate toy example |

| html | 22d32ef | Marek Cmero | 2022-05-31 | Build site. |

| Rmd | e722dc0 | Marek Cmero | 2022-05-31 | Fix typo, minor code update |

| html | b524238 | Marek Cmero | 2022-05-26 | Build site. |

| Rmd | afd79e5 | Marek Cmero | 2022-05-26 | Added revised model, in silico mixtures redone with 1 supporting read, added input cell estimates |

| html | 0b0a0dd | Marek Cmero | 2022-05-12 | Build site. |

| Rmd | 3c84b8f | Marek Cmero | 2022-05-12 | update model for haploid genome |

| html | 0e46464 | Marek Cmero | 2022-04-11 | Build site. |

| Rmd | f5ca0c0 | Marek Cmero | 2022-04-11 | added mixture experiment calculation |

| html | cde303e | Marek Cmero | 2022-02-17 | Build site. |

| Rmd | 7cfe3e4 | Marek Cmero | 2022-02-17 | Updated/simplified model |

| html | e5ed9a7 | Marek Cmero | 2021-12-15 | Build site. |

| html | 5a3c6b1 | Marek Cmero | 2021-12-15 | Build site. |

| html | cbb4886 | mcmero | 2021-09-22 | Build site. |

| Rmd | cf87608 | mcmero | 2021-09-22 | Added equation |

| Rmd | 5cb4f4d | mcmero | 2021-09-22 | Fix probability of selecting mutant fragment |

| html | 5cb4f4d | mcmero | 2021-09-22 | Fix probability of selecting mutant fragment |

| html | 2f677c2 | mcmero | 2021-09-22 | Build site. |

| Rmd | de62c5c | mcmero | 2021-09-22 |

|

| html | de62c5c | mcmero | 2021-09-22 |

|

| html | b680ba0 | mcmero | 2021-09-17 | Build site. |

| Rmd | ebb9d74 | mcmero | 2021-09-17 | initial commit |

| html | ebb9d74 | mcmero | 2021-09-17 | initial commit |

library(ggplot2)

library(dplyr)Model

Q: how many cells (or “cell equivalents”) do we need to sequence to detect variants down to a VAF of X%

Assumptions

- We consider a haploid genome without SCNAs

- We’re not considering DNA extraction efficiency or ligation efficiency

Probability of sequencing a mutant cell

The probability of selecting a cell with a given variant from a pool of \(n\) cells is the variant allele frequency (VAF) \(v\). This indicates that a VAF of 0.01 means 1 cell in 100 is a mutant (\(nv\)).

We assume the probability of selecting a mutant cell is binomially distributed. We want to know the probability of selecting at least one mutant:

\(P(Bin(n, v)) > 0)\) = 0.95

This is equivalent to:

\(P(Bin(n, v)) = 0)\) = 0.05

Where \(n\) is the number of sequenced cells.

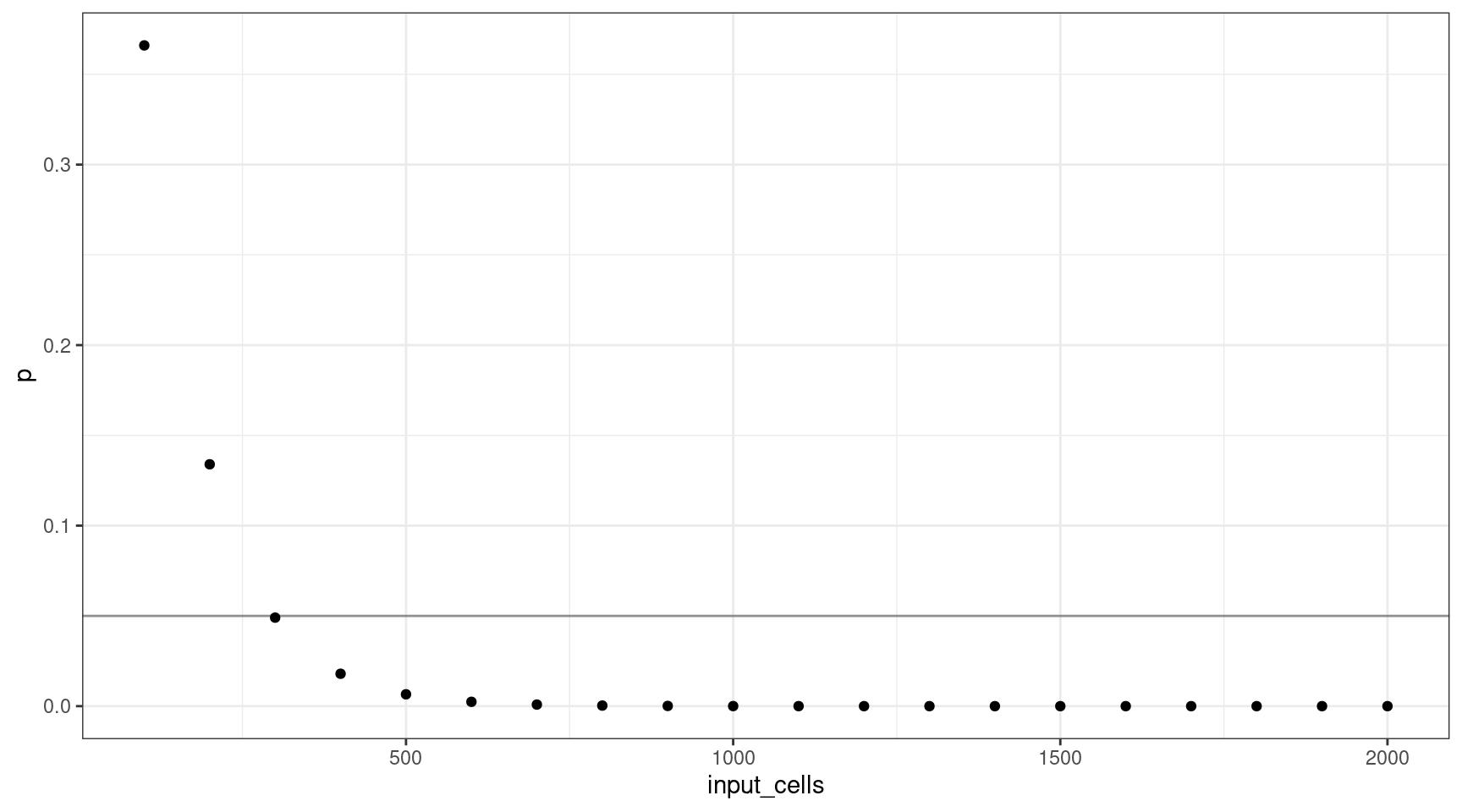

Let’s consider how many input cells (\(n\)) are required to select at least one mutant cell at 1% VAF (95% confidence).

We’ll consider 100 cell increments from \(n = \{100, 200..2000\}\). Using these values, we can plot the probability selecting of sequencing at least one cell.

v = 0.01

n = seq(100, 2000, 100)

vafs <- data.frame(vaf=v,

p=pbinom(0, n, v),

input_cells=n,

mutant_cells=(n * v))

ggplot(vafs, aes(input_cells, p)) +

geom_point() +

theme_bw() +

geom_hline(yintercept=0.05, alpha=0.4)

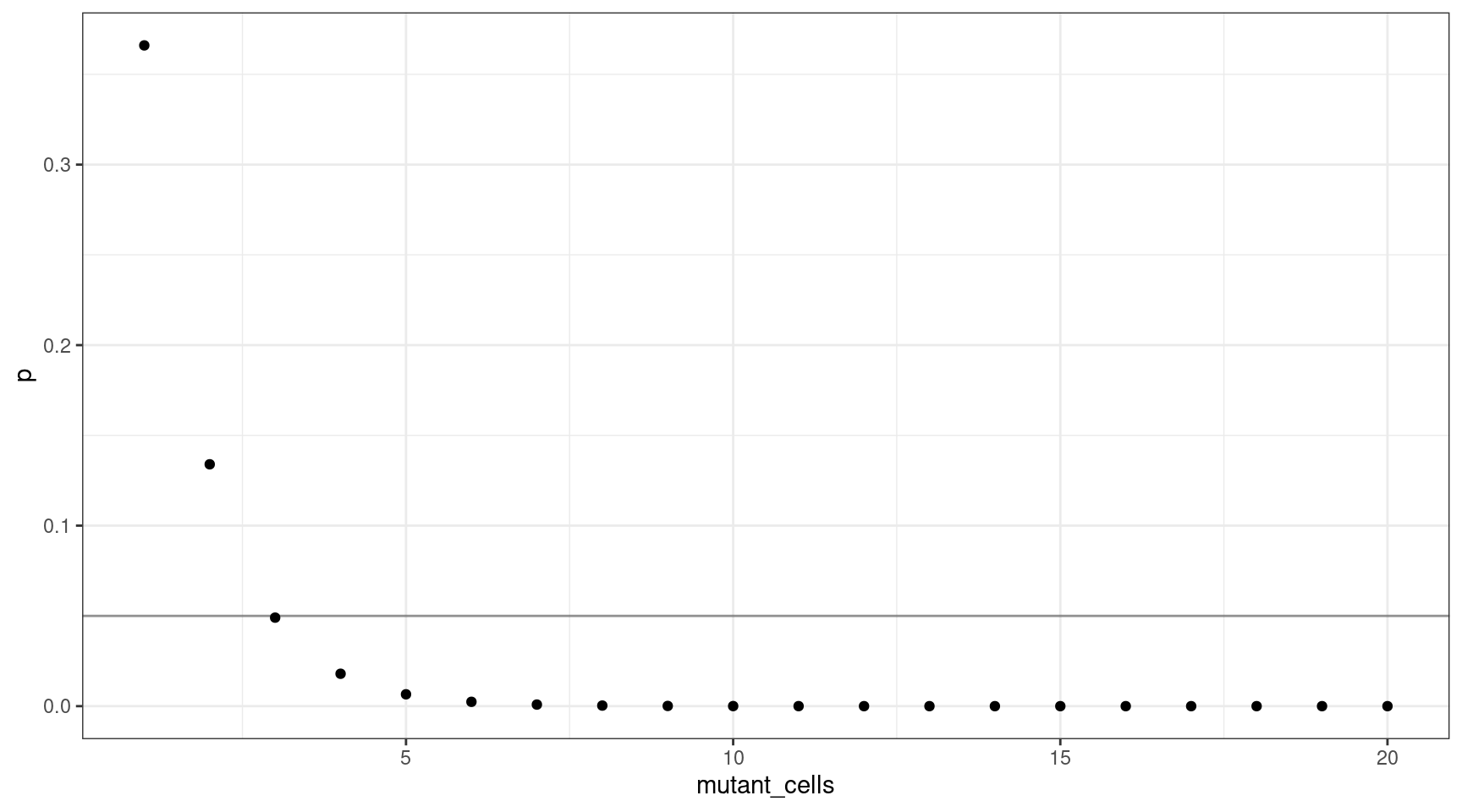

We can also plot this as mutant cells instead of VAF:

ggplot(vafs, aes(mutant_cells, p)) +

geom_point() +

theme_bw() +

geom_hline(yintercept=0.05, alpha=0.4)

This shows that for a VAF target of 1%, we should sequence at least 300 cells (3 are mutants).

deviation <- abs(0.05 - vafs$p)

print(vafs[which(deviation == min(deviation)),]) vaf p input_cells mutant_cells

3 0.01 0.04904089 300 3Varying the VAF

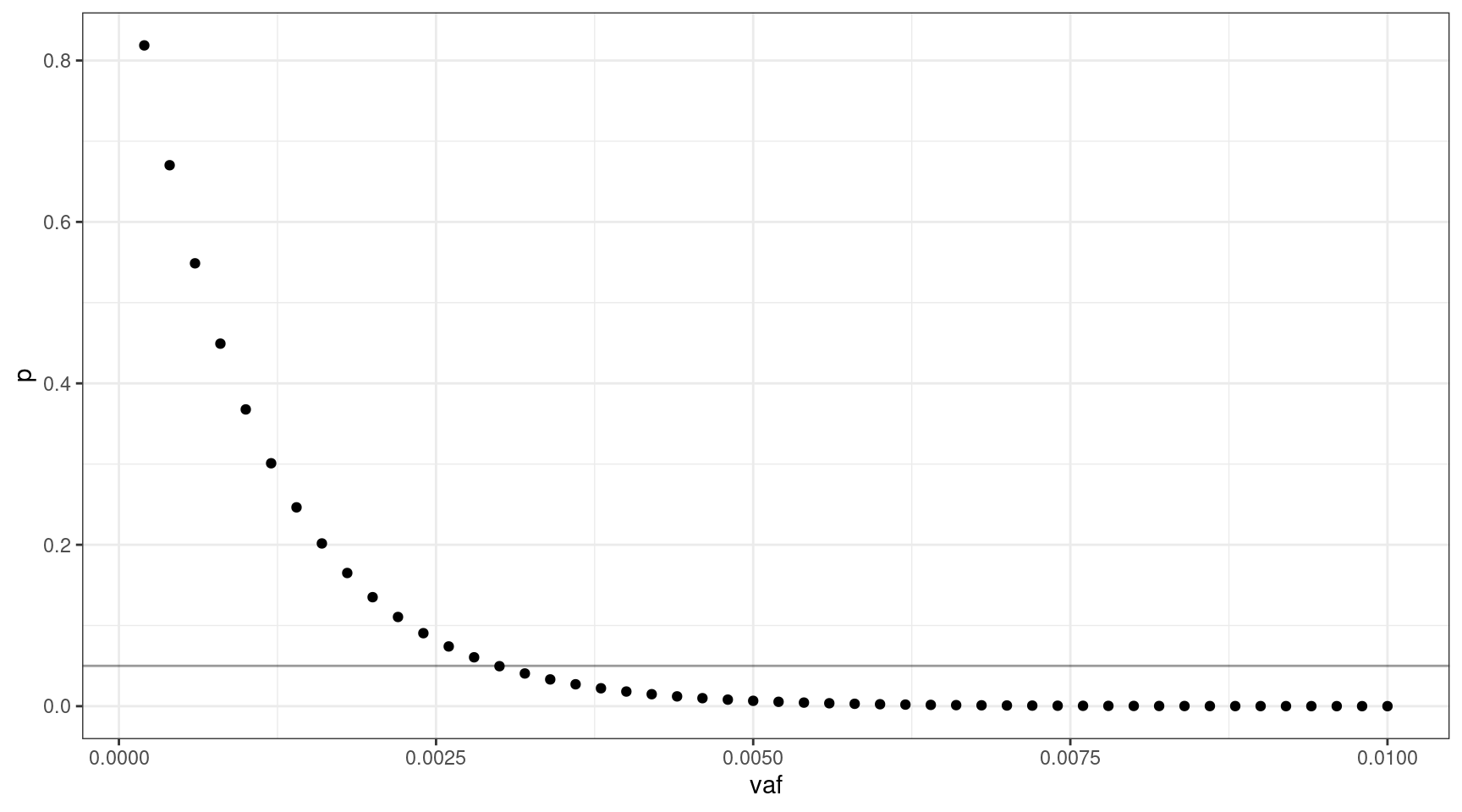

If we select 1000 input cells, what’s the lowest VAF we can sequence down to, where \(v = {0.0002, 0.0004..0.01}\).

v = seq(0.0002, 0.01, 0.0002)

n = 1000

vafs <- data.frame(vaf=v,

p=pbinom(0, n, v),

input_cells=n,

mutant_cells=(n * v))

ggplot(vafs, aes(vaf, p)) +

geom_point() +

theme_bw() +

geom_hline(yintercept=0.05, alpha=0.4)

We can sequence down to approx 0.3% VAF.

deviation <- abs(0.05 - vafs$p)

print(vafs[which(deviation == min(deviation)),]) vaf p input_cells mutant_cells

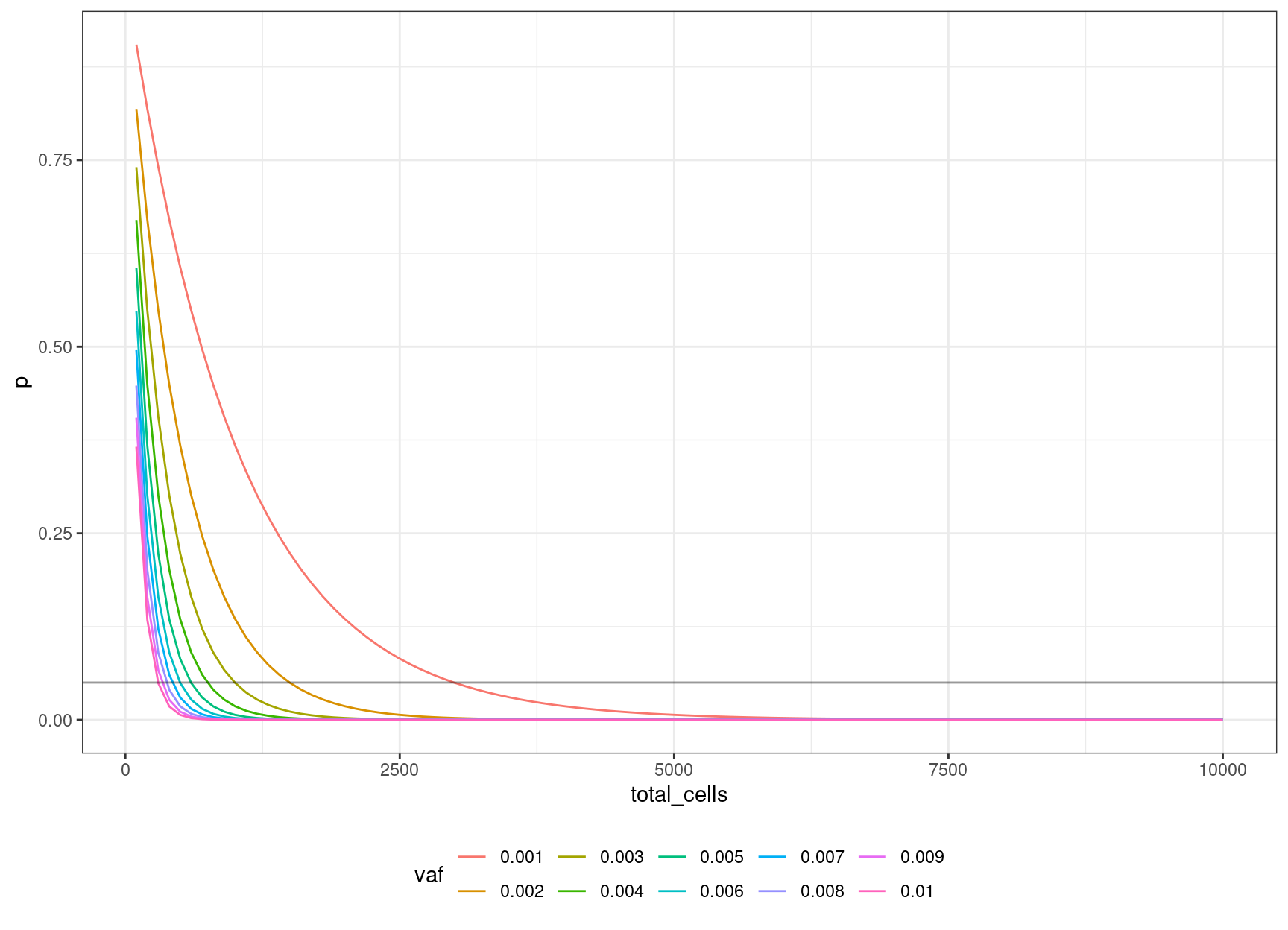

15 0.003 0.04956308 1000 3We can look at the relationship between input cells and allele frequency:

cells_vs_vaf = NULL

n = seq(100, 10000, 100)

V = seq(0.001, 0.01, 0.001)

for (v in V) {

toadd <- data.frame(

vaf=as.factor(v),

p=pbinom(0, n, v),

total_cells=n

)

cells_vs_vaf <- rbind(cells_vs_vaf, toadd)

}

ggplot(cells_vs_vaf, aes(total_cells, p, colour=vaf)) +

geom_line() +

theme_bw() +

theme(legend.position = 'bottom') +

geom_hline(yintercept=0.05, alpha=0.4)

We can define an equation based on the binomial probability calculation, of obtaining the number of cells to sequence, to be 95% confident of sequencing the mutation based on the target VAF:

\((1 – v) ^ n = 0.05\)

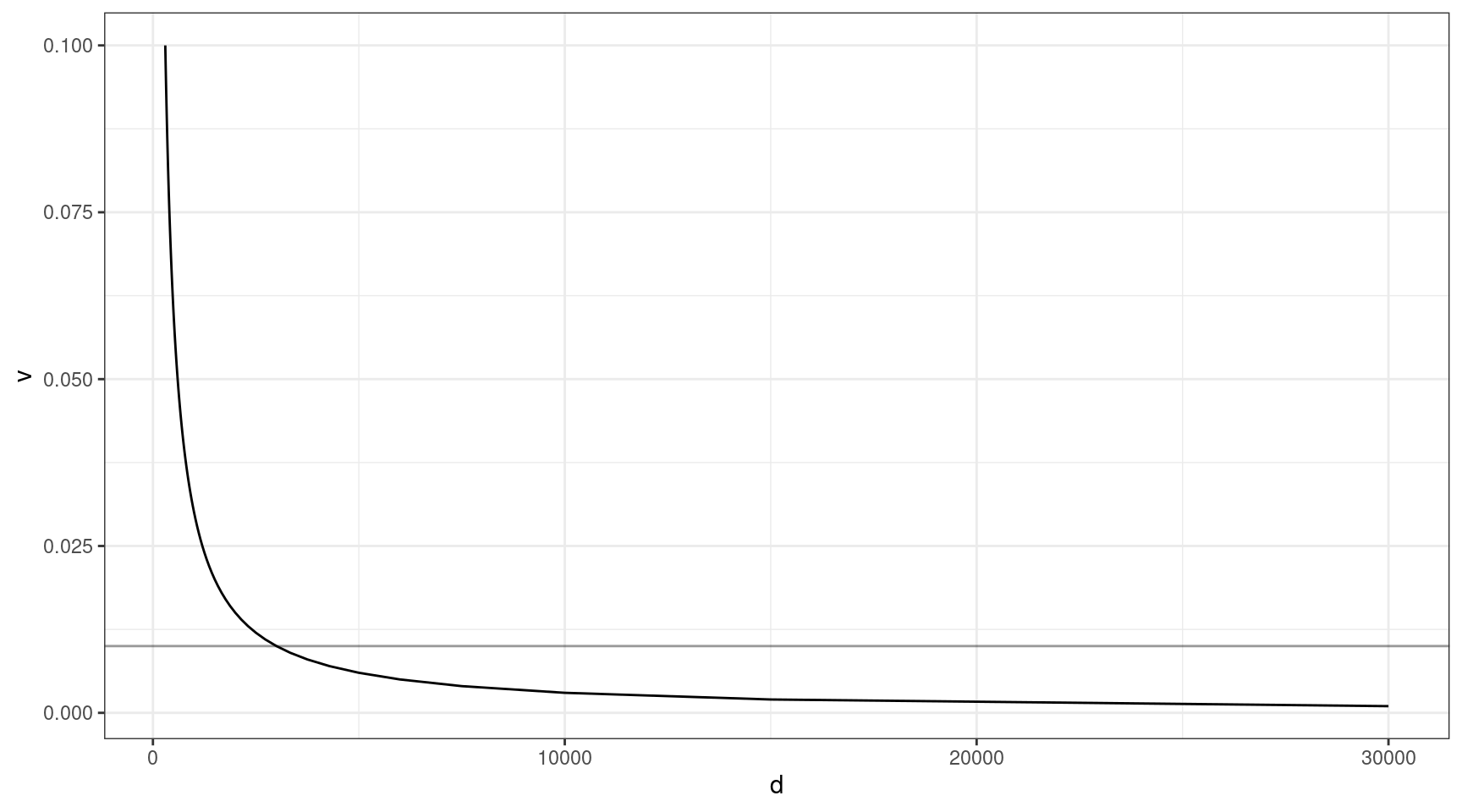

Coverage

The above thought experiment won’t tell us the probability of sequencing the variant, as sequencing involves a whole host of other factors. Coverage is one of the most important. Given that approximately 30x raw coverage is required to yield 1x of high quality duplex coverage, we can look at the relationship between coverage and VAF (need the variant to be at least 1x to detect).

Here we can see that at VAF = 0.01 (line), we need to sequence to approx 3000x, and this gets exponentially deeper as we go down in target VAF.

v = seq(0.001, 0.1, 0.001)

ccov <- data.frame(d = 30 / v,

v = v)

ggplot(ccov, aes(d, v)) +

geom_line() +

theme_bw() +

geom_hline(yintercept=0.01, alpha=0.4)

| Version | Author | Date |

|---|---|---|

| cde303e | Marek Cmero | 2022-02-17 |

Mixture experiments

How much total coverage will we need, in order to detect a mixture of \(X\%?\) We define this as successfully calling at least 50% of the variants at VAF \(v = \{0.1, 0.01, 0.001\}\) with at least one supporting duplex read. We assume our duplex yield is 30 (i.e., 30x coverage yields 1x duplex coverage).

Our binomial calculation takes the form of:

\(P(Bin(n, v)) = 0)\) = 0.5 where \(n =\) duplex coverage.

df <- NULL

min_reads <- 1

duplex_yield <- 30

duplex_seq_range <- seq(1, 10000, 1)

fraction_we_can_miss <- 0.5

for(vaf in c(0.1, 0.01, 0.001)) {

tmp <- data.frame(

pval=pbinom(min_reads - 1, duplex_seq_range, vaf),

vaf=vaf,

duplex_cov=duplex_seq_range,

total_cov=duplex_seq_range * duplex_yield

)

tmp <- tmp[tmp$pval < fraction_we_can_miss,]

tmp <- tmp[tmp$pval == max(tmp$pval),]

df <- rbind(df, tmp)

}

print(df) pval vaf duplex_cov total_cov

7 0.4782969 0.100 7 210

69 0.4998370 0.010 69 2070

693 0.4999002 0.001 693 20790Revised model

Attempting to combine both coverage and cell input, we can take the following formula:

\((1 - v) ^ {(n * c * d)} = p\)

Where \(v\) = target VAF, \(n\) = number of input cells, \(c\) = coverage per input cell, \(d\) = duplex efficiency and \(p\) = probability of missing a variant.

We set:

- \(c = 10\) (coverage we are aiming for for each genome equivalent)

- \(d = 0.057\) (average efficiency for NanoSeq MB2)

- \(p = 0.05\) (fraction of variants we are okay to miss)

- \(v = \{0.001, 0.01, 0.05, 0.1\}\) (target VAFs)

- \(n = \{100, 200..10000\}\) (range of input cells)

We also need to check whether the number of genome equivalents we take forward for sequencing exceeds the lane capacity (600GB). Our sequenced bases will be:

\(b = g * c * n\) where \(g\) = genome size.

lane_cap <- 6e11

g <- 5e6

pmiss <- 0.05

c <- 8

e <- 1/ 30

vafs <- c(0.001, 0.01, 0.05, 0.1)

n <- seq(100, 12000, 100)

# calculate duplex coverage

dcov <- n * c * e

# calculate total sequenced bases

bases <- g * c * n

probs <- NULL

min_cells <- NULL

for(vaf in vafs) {

prob <- data.frame(ncells = n,

dcov = dcov,

p = pbinom(0, round(dcov), vaf),

vaf = vaf,

bases = bases,

within_capacity = bases < lane_cap)

tmp <- prob[prob$p < pmiss,]

tmp <- tmp[tmp$p == max(tmp$p),]

min_cells <- rbind(tmp, min_cells)

probs <- rbind(probs, prob)

}

print(min_cells) ncells dcov p vaf bases within_capacity

2 200 53.33333 0.003757102 0.100 8.00e+09 TRUE

3 300 80.00000 0.016515374 0.050 1.20e+10 TRUE

12 1200 320.00000 0.040110887 0.010 4.80e+10 TRUE

113 11300 3013.33333 0.049069996 0.001 4.52e+11 TRUEggplot(probs, aes(ncells, p, colour = factor(vaf))) +

geom_point() +

theme_minimal() +

geom_hline(yintercept = pmiss, alpha=0.4) +

scale_color_brewer(palette = 'Dark2')

| Version | Author | Date |

|---|---|---|

| ede1103 | Marek Cmero | 2022-08-11 |

Sequencing depth and capture size

Example statistics table for 1% and 0.1% VAF experiments for different capture sizes (2kb, 10kb, 5Mb and 36.8Mb (human exome size).

lane_cap <- 6e11

capture_sizes <- c(2000, 10000, 5e6, 3.68e7, 4e7)

chance_to_miss <- 0.05

target_cov <- 8

efficiency <- 1 / 30 # conservative estimate

ploidy <- 2

readlen <- 300

ncells <- c(5, seq(200, 6000, 10))

vafs <- c(0.01, 0.05, 0.1)

df_full <- NULL

for (capture_size in capture_sizes) {

for (vaf in vafs) {

df <- NULL

for (ncell in ncells) {

dna_depth <- ncell * ploidy

dna_input <- capture_size * dna_depth

seq_bases <- dna_input * target_cov

tot_cov <- dna_depth * target_cov

dup_cov <- tot_cov * efficiency

tmp <- data.frame(ncell = ncell,

vaf = vaf,

tot_cov = tot_cov,

dup_cov = dup_cov,

seq_megabases = seq_bases / 1e6,

million_reads = seq_bases / readlen / 1e6,

capture_size_kb = capture_size / 1e3,

chance_to_miss = pbinom(0, round(dup_cov), vaf),

lane_fraction = seq_bases / lane_cap,

max_samples_per_lane = as.integer(1 / (seq_bases / lane_cap)))

df <- rbind(df, tmp)

}

df <- df[df$chance_to_miss < chance_to_miss,]

df <- df[df$chance_to_miss == max(df$chance_to_miss),]

df_full <- rbind(df_full, df)

}

}

print(df_full[order(df_full$vaf, decreasing = TRUE),]) ncell vaf tot_cov dup_cov seq_megabases million_reads capture_size_kb

21 200 0.10 3200 106.6667 6.40 2.133333e-02 2

23 200 0.10 3200 106.6667 32.00 1.066667e-01 10

25 200 0.10 3200 106.6667 16000.00 5.333333e+01 5000

27 200 0.10 3200 106.6667 117760.00 3.925333e+02 36800

29 200 0.10 3200 106.6667 128000.00 4.266667e+02 40000

2 200 0.05 3200 106.6667 6.40 2.133333e-02 2

22 200 0.05 3200 106.6667 32.00 1.066667e-01 10

24 200 0.05 3200 106.6667 16000.00 5.333333e+01 5000

26 200 0.05 3200 106.6667 117760.00 3.925333e+02 36800

28 200 0.05 3200 106.6667 128000.00 4.266667e+02 40000

38 560 0.01 8960 298.6667 17.92 5.973333e-02 2

381 560 0.01 8960 298.6667 89.60 2.986667e-01 10

382 560 0.01 8960 298.6667 44800.00 1.493333e+02 5000

383 560 0.01 8960 298.6667 329728.00 1.099093e+03 36800

384 560 0.01 8960 298.6667 358400.00 1.194667e+03 40000

chance_to_miss lane_fraction max_samples_per_lane

21 1.270423e-05 1.066667e-05 93750

23 1.270423e-05 5.333333e-05 18750

25 1.270423e-05 2.666667e-02 37

27 1.270423e-05 1.962667e-01 5

29 1.270423e-05 2.133333e-01 4

2 4.134526e-03 1.066667e-05 93750

22 4.134526e-03 5.333333e-05 18750

24 4.134526e-03 2.666667e-02 37

26 4.134526e-03 1.962667e-01 5

28 4.134526e-03 2.133333e-01 4

38 4.953626e-02 2.986667e-05 33482

381 4.953626e-02 1.493333e-04 6696

382 4.953626e-02 7.466667e-02 13

383 4.953626e-02 5.495467e-01 1

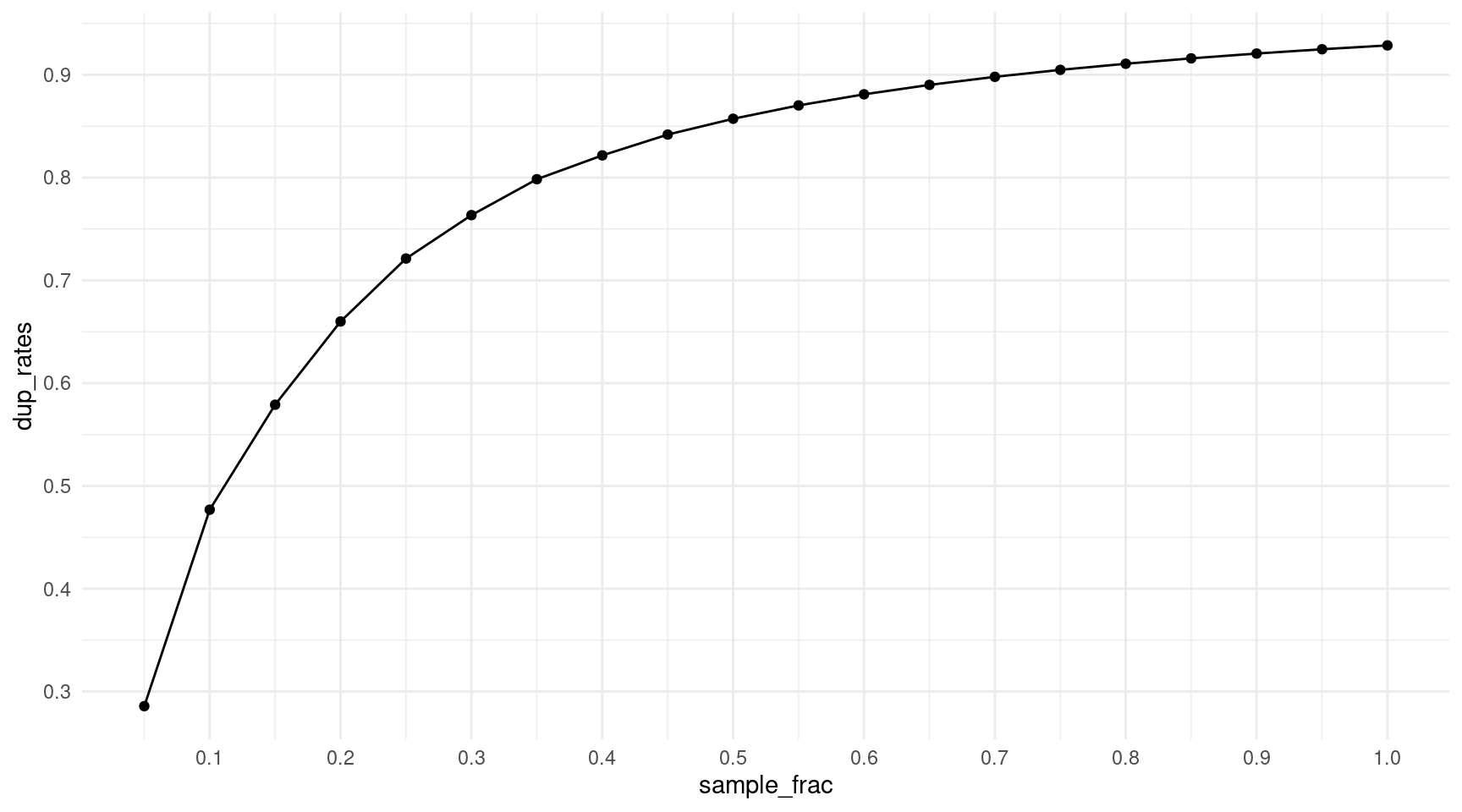

384 4.953626e-02 5.973333e-01 1Downsampling experiment model

How does the duplicate rate behave when we downsample reads? We can use the rough stats of the 1% ecoli spike-in to check for sensible downsampling fractions. This sample has roughly 340m duplex reads and an average family size of 14 (each read is sequenced on average 14x). We use a poisson distribution to generate fake reads, and then down sample from 5% to 95%. We then calculate the duplicate rate.

N_READS <- 340

COV_RATIO <- 14

# recursive function, times = number of letters in toy reads

make_toy_reads <- function(times, toy_reads=NULL) {

if (times < 1) {

return(toy_reads)

} else if (is.null(toy_reads)) {

toy_reads <- LETTERS

times <- times - 1

} else {

toy_reads <- lapply(toy_reads, paste0, LETTERS) %>% unlist()

times <- times - 1

}

make_toy_reads(times, toy_reads)

}

toy_reads <- make_toy_reads(2) %>% head(N_READS)

all_reads <- rep(toy_reads, rpois(N_READS, COV_RATIO))

dup_rate <- 1 - (N_READS / length(all_reads))

print(paste('Max duplicate rate:', round(dup_rate, 2)))[1] "Max duplicate rate: 0.93"sample_frac <- seq(0.05, 1, 0.05)

n_sample <- round(sample_frac * length(all_reads))

all_samples <- lapply(n_sample, function(n){sample(all_reads, n)})

dup_rates <- lapply(all_samples, function(s){1 - (length(unique(s)) / length(s))}) %>% unlist()

df <- data.frame(sample_frac, dup_rates)

ggplot(df, aes(sample_frac, dup_rates)) +

geom_point() +

geom_line() +

theme_minimal() +

scale_x_continuous(breaks = seq(0.1, 1, 0.1)) +

scale_y_continuous(breaks = seq(0.1, 1, 0.1))

| Version | Author | Date |

|---|---|---|

| ede1103 | Marek Cmero | 2022-08-11 |

sessionInfo()R version 4.2.0 (2022-04-22)

Platform: x86_64-pc-linux-gnu (64-bit)

Running under: CentOS Linux 7 (Core)

Matrix products: default

BLAS: /stornext/System/data/apps/R/R-4.2.0/lib64/R/lib/libRblas.so

LAPACK: /stornext/System/data/apps/R/R-4.2.0/lib64/R/lib/libRlapack.so

locale:

[1] LC_CTYPE=en_US.UTF-8 LC_NUMERIC=C

[3] LC_TIME=en_US.UTF-8 LC_COLLATE=en_US.UTF-8

[5] LC_MONETARY=en_US.UTF-8 LC_MESSAGES=en_US.UTF-8

[7] LC_PAPER=en_US.UTF-8 LC_NAME=C

[9] LC_ADDRESS=C LC_TELEPHONE=C

[11] LC_MEASUREMENT=en_US.UTF-8 LC_IDENTIFICATION=C

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] dplyr_1.1.0 ggplot2_3.3.6 workflowr_1.7.0

loaded via a namespace (and not attached):

[1] Rcpp_1.0.9 RColorBrewer_1.1-3 highr_0.9 compiler_4.2.0

[5] pillar_1.8.1 bslib_0.4.0 later_1.3.0 git2r_0.31.0

[9] jquerylib_0.1.4 tools_4.2.0 getPass_0.2-2 digest_0.6.30

[13] gtable_0.3.1 jsonlite_1.8.3 evaluate_0.17 lifecycle_1.0.3

[17] tibble_3.1.8 pkgconfig_2.0.3 rlang_1.0.6 cli_3.4.1

[21] rstudioapi_0.14 yaml_2.3.5 xfun_0.33 fastmap_1.1.0

[25] withr_2.5.0 httr_1.4.4 stringr_1.5.0 knitr_1.40

[29] generics_0.1.3 fs_1.5.2 vctrs_0.5.2 sass_0.4.2

[33] tidyselect_1.2.0 grid_4.2.0 rprojroot_2.0.3 glue_1.6.2

[37] R6_2.5.1 processx_3.7.0 fansi_1.0.3 rmarkdown_2.16

[41] farver_2.1.1 callr_3.7.2 magrittr_2.0.3 whisker_0.4

[45] scales_1.2.1 ps_1.7.1 promises_1.2.0.1 htmltools_0.5.3

[49] colorspace_2.0-3 httpuv_1.6.6 labeling_0.4.2 utf8_1.2.2

[53] stringi_1.7.8 munsell_0.5.0 cachem_1.0.6